CodeSpeed (Godspeed _ Programmers)

Everyone loves speed.

And so does your next-door Python programmer.

But, have you heard of the predicament of Python developers when it comes to speed and performance?

You must have, if you happen to be a developer yourself, or come from a Platform / Product Engineering background, where interfacing with the programmers is a bread-butter routine for you, or an occasional red pill — :p

I shall dwell majorly on Python’s speed and performance aspects today.

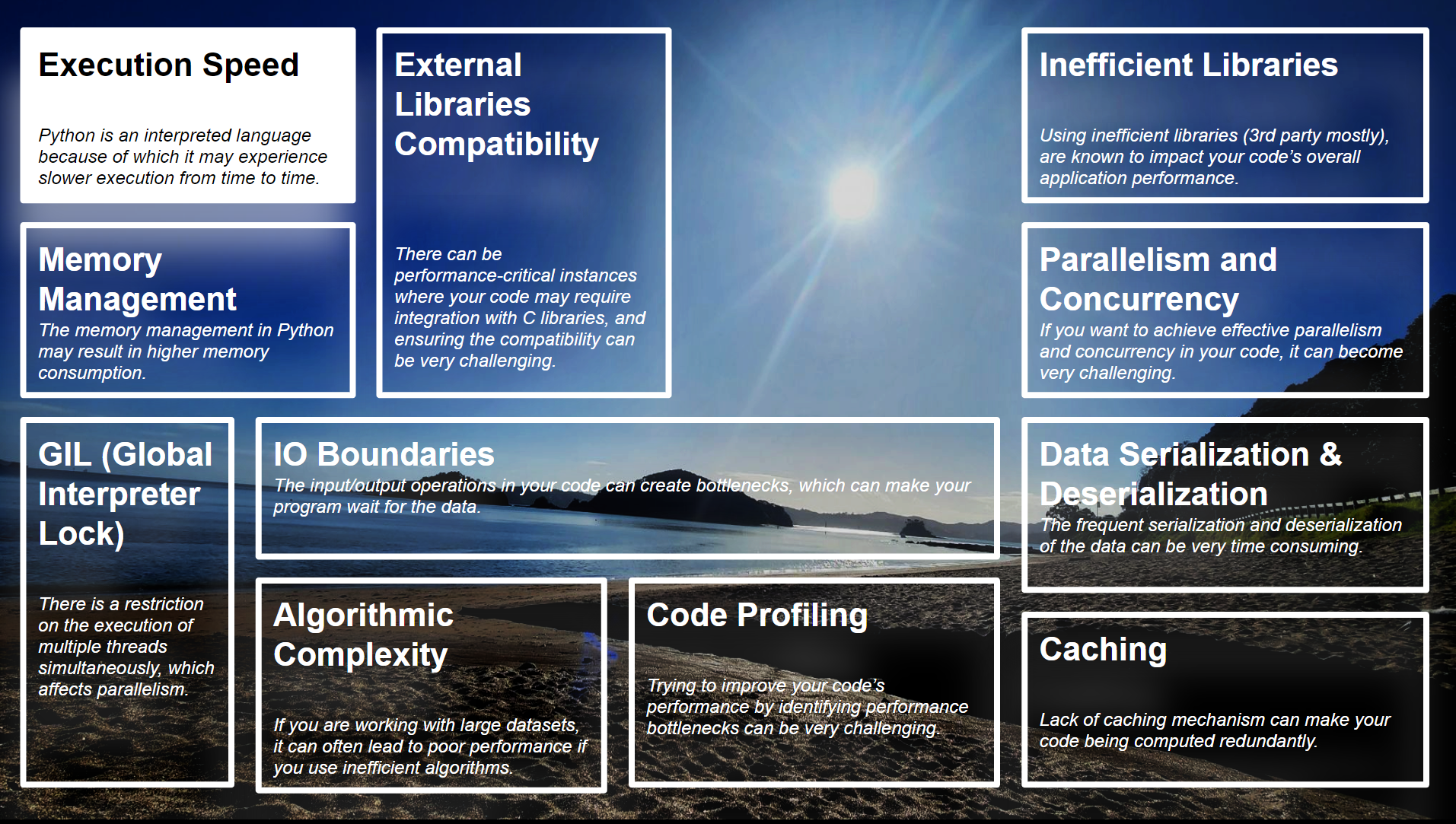

However, before I do that, here are some challenges, when looked holistically, faced by Python programmers (or coders in the relatable lingo :p )

The above picture hosts all the possible challenges, a Python programmer for instance, faces in their day to day programming routine. In some other article, I shall get into the depth of each of these challenges.

Today, let’s enjoy our coffee over Execution Speed.

Execution Speed

Contrary to the compiled languages, Python being an interpreted language, takes a fair (read as occasionally substantial too) amount of time when it comes to execution. There have been solutions around this problem of execution and being adopted, while we are talking!

For example — Using Just-in-time (JIT) compilers like PyPy or optimizing critical sections using Cython to achieve better performance.

However, if there is something that is dancing off the top of my head, deserving immediate attention is the :-

Use of Vectorization over Loops in PLs

It is so common to use loops in our codes, the moment there is a use-case of repetitive operations in any of our programming problem statements.

The real test of a loop-like iteration is when it is subjected to massive datasets. For instance, if your data has records in billions / millions, and you have to iterate through those, using loops will almost be like “taking the slow boat to China.”

And that is exactly where implementing Vectorization becomes the need of the hour.

If I were to explain Vectorization :-

It is the process of performing the Operations on the datasets by utilizing NumPy arrays. Or to put it in other words, it is a technique of applying operations on the entire arrays or matrices, instead of individual elements. It is a way to express operations as if they were performed on entire arrays instead of iterating through each element using loops.

Quick take-aways around NumPy & Vectorization :-

- The biggest area of applications of Vectorization is Numerical and Scientific Computing.

- As it would have already come out apparent that the library in Python which enables Vectorization is NumPy.

- NumPy provides a powerful array object called ‘numpy.ndarray’ and associated functions that will operate on entire arrays at once.

- A quick illustration of Vectorization in NumPy :-

import numpy as np

# Creating two NumPy arrays - array_1 and array_2

array_1 = np.array([10, 20, 30, 40])

array_2 = np.array([15, 25, 35, 45])

# Vectorized addition of the two arrays

sum_arrays = array_a + array_b

print(sum_arrays)On printing the sum_arrays, we will see [25, 45, 65, 85].

- The addition ( + ) operator is implemented element-wise, without the need of using explicit for loops.

- Vectorization is extremely crucial for optimizing scientific and numerical computations, making them more concise and efficient.

- By taking advantage of low-level, optimized routines implemented in compiled languages (like C and Fortran) that underlie NumPy, using Vectorization in your programs, gives a performance boost to the overall execution, compared to traditional looping constructs in Python.

As it is seen in the snapshot from my Colab NB, the Vectorization method is a whooping 58.55 times faster than the method of loops.

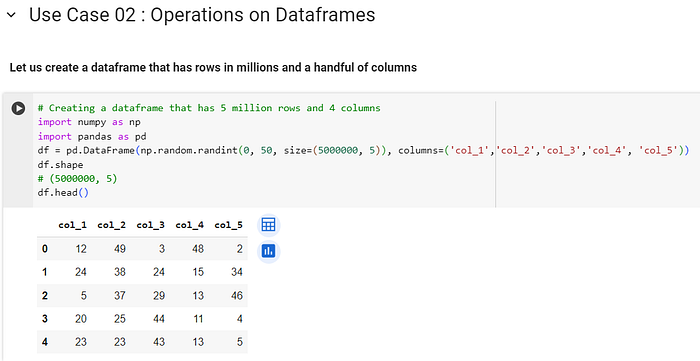

We can also try to validate the advantages of Vectorization over loops, through operations on Dataframes : — — — — —

Many a times, programmers tend to turn towards loops for feature engineering. Or to take a simpler use-case, they use for loops to iterate through the countless rows of a data-frame; creating new derived columns using mathematical operators.

Let us create a data-frame, to demonstrate the idea : — — —

Looking at the amount of time, loops will take to create a new column — ‘col_6’ as a product of the columns ‘col_4’ and ‘col_5’.

It takes a massively high ~370 seconds to create a new column using loops.

Doing the same operation using Vectorization :-

When using Vectorization, it just takes 0.05 seconds, which is approximately ~7000 times faster than the method of loops.

Use of Parallel Processing

Another effective player on the scene is Parallel Processing in Python. Contrary to processing the data structures in a serial format, modules / methods like Pool under the “multiprocessing” package, for e.g., processes the data parallelly, thus saving on time and compute.

However, the caveat is that if your data size is small, you may not get the results as per your expectation. As you can see below, that the time taken by serial processing of a 10 elements long list is approximately 3.34 seconds (with a multiplicative factor of 1000000 for the ease of demonstration. You can divide the result by 1000000 at your end for actual analysis).

On the other hand the time taken by parallel processing is a whooping 28376 seconds (just divide by 1000000 at your end). This is clearly not what we expected.

The parallel processing is instead 8496 times slower than serial processing. (Read that in red, for the lack of colors on Medium :p )

So, now when I increase the size of the list of the random numbers to 100 million, the difference in time taken becomes much smaller for the serial and parallel processing. I removed the multiplier this time, since the size of the list has been increased.

The time taken by serial processing is 12.99 seconds and by parallel processing is 42.27 seconds.

This was just a linear one-dim list of 100 million random numbers. As you make your data bigger in terms of length / dimensionality / overall size, etc. we will reach a point where the parallel processing takes much lesser time as compared to serial processing.

We should also talk about Caching IMO. Memoization, you know!

I will come back tomorrow on this — — —

Se you around :)