Give me an explanation (Alibi)

Nothing to fret.

No ‘body’ owes me an explanation.

My Colab notebook does, though.

I was just working on a Random Forest Classifier today.

You know about it, I bet.

Ummm — no….

My pockets aren't very heavy these days, so no betting. If you aren't familiar with them, this short piece might help you revisit the basics. It's a breather on Decision Tree Classifiers: https://shivamdutt606.medium.com/decision-tree-classifiers-lets-talk-about-them-3df1ddeb1255

RFCs are ensembles of multiple Decision Tree Classifiers, so for a closer, more microscopic look at RFCs themselves, try this one: https://towardsdatascience.com/random-forest-explained-a-visual-guide-with-code-examples-9f736a6e1b3c/

My Data Science safari started in 2020, and ever since, I have often wished for a tool that could give a fair explanation of what is going on inside the model, YKWIM.

For a very long time, I did not know about Alibi and its capabilities.

The Alibi library is used for Machine Learning (ML) model explainability and interpretability. It helps you understand why a model makes certain predictions, which is crucial for trust, debugging, and fairness in AI systems.

Below is the code I used to create a basic RandomForestClassifier model.

I have used the iris dataset.

KernelShap (imported in the code below, although this walkthrough ends up using a different explainer) is a model-agnostic explainer from the Alibi library. It estimates SHAP (SHapley Additive exPlanations) values using Kernel SHAP, the method from the SHAP framework that approximates Shapley values with a specially weighted linear regression.

Model-agnostic here means that it only needs the model's predictions, so it works with black-box models: it approximates feature attributions by querying the model on perturbed inputs and fitting a kernel-weighted linear model to the results. By black-box, I mean ML models whose inner workings (the internal logic, the computations, the decision-making process) are too complex for humans to interpret easily.
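Since KernelShap came up: Kernel SHAP estimates Shapley values by fitting a weighted linear model over sampled feature coalitions. Iris has only four features, so all 16 coalitions can be enumerated and the Shapley values computed exactly, which makes the quantity Kernel SHAP is after easier to see. This is a brute-force illustration, not Alibi's KernelShap. It assumes that "absent" features are filled in from a background sample of training rows (the first 50, an arbitrary choice). coalition_value and exact_shapley are hypothetical helper names of mine.

```python
from itertools import combinations
from math import factorial

import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Same data and model as in the walkthrough.
iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42)
model = RandomForestClassifier(n_estimators=100, random_state=42).fit(X_train, y_train)


def coalition_value(x, subset, background, cls):
    """Mean P(cls) when features in `subset` take x's values and the rest come from background rows."""
    data = background.copy()
    cols = list(subset)
    data[:, cols] = x[cols]
    return model.predict_proba(data)[:, cls].mean()


def exact_shapley(x, background, cls):
    """Exact Shapley values: weighted average of each feature's marginal contribution over all coalitions."""
    n = len(x)
    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = coalition_value(x, subset + (i,), background, cls)
                without_i = coalition_value(x, subset, background, cls)
                phi[i] += weight * (with_i - without_i)
    return phi


x = X_test[0]
cls = int(model.predict(x.reshape(1, -1))[0])
background = X_train[:50]
phi = exact_shapley(x, background, cls)
for name, value in zip(iris.feature_names, phi):
    print(f"{name}: {value:+.3f}")
```

A handy sanity check: the four values add up to the model's probability for the predicted class minus its average probability over the background rows. Kernel SHAP exists because this enumeration doubles with every extra feature.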

```python
import pandas as pd

from alibi.explainers import KernelShap  # introduced above; not used further in this walkthrough

import numpy as np

from sklearn.ensemble import RandomForestClassifier
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

# Load dataset
iris = load_iris()
X, y = iris.data, iris.target

# Split dataset into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train a Random Forest classifier
model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)
```

I then thought of using an explainer from Alibi's family of explainers, AnchorTabular, as you'll see in the code below.

AnchorTabular is an explainability method from the Alibi library, designed to provide high-precision, human-interpretable explanations for models trained on tabular data. It is based on "Anchors: High-Precision Model-Agnostic Explanations" by Ribeiro et al. (2018).

I remember that in my early days of Machine Learning, when I was pursuing a PGP in AI/ML at NIT Warangal, all those heavy, cryptic, overwhelming, extra-terrestrial lines of output that appear once you execute a block of ML Python code used to drive me bonkers.

Had I known about Alibi back then, life might have been simpler.

For instance, today, while working on a Random Forest Classifier, I trained the model as in the code above: model = RandomForestClassifier(n_estimators=100, random_state=42)

As always, I wondered what was happening behind the model I had trained, and then, once I fit it with X_train (the independent features) and y_train (the dependent feature, i.e. the target), how the computation was happening, explained at least abstractly.

I just told you the problem.

And now, the solution — — — —

Six lines of code, and it tells you what is driving your ML model, or rather, to be precise, what is driving the individual predictions coming out of it.

```python
from alibi.explainers import AnchorTabular

# Initialize the explainer
explainer = AnchorTabular(predictor=model.predict, feature_names=iris.feature_names)
explainer.fit(X_train)

# Select an instance to explain
instance = X_test[0].reshape(1, -1)

# Generate the explanation
explanation = explainer.explain(instance)
print(explanation)
```

First and foremost, import the god-sent library:

```python
from alibi.explainers import AnchorTabular
```

To recall: AnchorTabular is a local explanation method that generates "anchors", i.e. if-then rules. These rules explain why the model made a specific prediction for a given instance (a single row of data).
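To make "an anchor is a rule" concrete, here is a minimal sketch that encodes the anchor from the raw output further below as plain (feature index, lower bound, upper bound) conditions and checks rows against it. The ANCHOR list and the satisfies / anchor_mask helpers are hypothetical names of mine, not part of Alibi's API. Column order follows iris.feature_names (sepal length, sepal width, petal length, petal width).

```python
import numpy as np

# (feature index, lower bound exclusive, upper bound inclusive)
ANCHOR = [
    (1, -np.inf, 3.40),  # sepal width (cm) <= 3.40
    (3, 0.30, 1.30),     # 0.30 < petal width (cm) <= 1.30
    (0, 5.75, np.inf),   # sepal length (cm) > 5.75
]


def satisfies(row, anchor=ANCHOR):
    """True if every condition of the anchor holds for a single row."""
    return all(lo < row[f] <= hi for f, lo, hi in anchor)


def anchor_mask(X, anchor=ANCHOR):
    """Vectorized version: one boolean per row of a 2-D array."""
    mask = np.ones(len(X), dtype=bool)
    for f, lo, hi in anchor:
        mask &= (X[:, f] > lo) & (X[:, f] <= hi)
    return mask


print(satisfies(np.array([6.1, 2.8, 4.7, 1.2])))  # the explained instance
print(satisfies(np.array([5.1, 3.5, 1.4, 0.2])))  # a setosa row; sepal width 3.5 breaks the rule
```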

And as I mention that, it rings a bell: a question from my PCAP exam about the ideal ways of importing a class or function from a package.

Anyway, moving ahead now.

Next, we create the explainer object, or, as you may say, initialize the explainer :-

```python
explainer = AnchorTabular(predictor=model.predict, feature_names=iris.feature_names)
```

— —

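Then we fit the explainer on the training dataset (AnchorTabular uses it to split each numerical feature into percentile bins and, later, to sample perturbed rows) :-

```python
explainer.fit(X_train)
```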

— —

Then we select an instance to explain :-

```python
instance = X_test[0].reshape(1, -1)
```

Here, we select the first record (index 0) of the test set and reshape it to (1, -1), i.e. one row with as many columns as there are features (four for iris).
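Why the reshape? scikit-learn estimators expect a 2-D input of shape (n_samples, n_features), while indexing a single row gives a 1-D array. A small sketch of the shapes involved, using the same split as above:

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42)

row = X_test[0]                # 1-D: one value per feature
instance = row.reshape(1, -1)  # 2-D: one row; -1 lets NumPy infer the 4 columns

print(row.shape)       # (4,)
print(instance.shape)  # (1, 4)
```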

— —

Next, we generate the explanation and print it :-

```python
explanation = explainer.explain(instance)
print(explanation)
```

— —

What does the raw explanation (the result) look like? That is, what do you see when you run print(explanation) :-

```
Explanation(meta={
  'name': 'AnchorTabular',
  'type': ['blackbox'],
  'explanations': ['local'],
  'params': {
              'seed': None,
              'disc_perc': (25, 50, 75),
              'threshold': 0.95,
              'delta': 0.1,
              'tau': 0.15,
              'batch_size': 100,
              'coverage_samples': 10000,
              'beam_size': 1,
              'stop_on_first': False,
              'max_anchor_size': None,
              'min_samples_start': 100,
              'n_covered_ex': 10,
              'binary_cache_size': 10000,
              'cache_margin': 1000,
              'verbose': False,
              'verbose_every': 1,
              'kwargs': {}}
            ,
  'version': '0.9.6'}
, data={
  'anchor': ['sepal width (cm) <= 3.40', '0.30 < petal width (cm) <= 1.30', 'sepal length (cm) > 5.75'],
  'precision': 1.0,
  'coverage': 0.0678,
  'raw': {
           'feature': [1, 3, 0],
           'mean': [0.37223823246878, 0.5032467532467533, 0.818018018018018, 1.0],
           'precision': [0.37223823246878, 0.5032467532467533, 0.818018018018018, 1.0],
           'coverage': [0.8282, 0.6605, 0.2025, 0.0678],
           'examples': [{'covered_true': array([[6.4, 2.4, 4.5, 1.5],
       [5.6, 2.8, 4.1, 1.3],
       [6.3, 2.7, 4.4, 1.3],
       [6.4, 2.3, 4.3, 1.3],
       [5.8, 2.3, 4.1, 1. ],
       [6.9, 2.7, 4.9, 1.5],
       [5.5, 2.4, 4.4, 1.2],
       [5.5, 2.8, 4. , 1.3],
       [5.7, 2.7, 4.2, 1.2],
       [7. , 2.2, 4.7, 1.4]]), 'covered_false': array([[7.4, 2.5, 6.1, 1.9],
       [4.9, 2.7, 1.5, 0.2],
       [5.8, 2.8, 1.2, 0.2],
       [5. , 2.7, 1.3, 0.3],
       [6.1, 2. , 5.6, 1.4],
       [7.2, 2.7, 6. , 1.8],
       [4.6, 2.7, 1.4, 0.2],
       [6.3, 2.5, 6. , 2.5],
       [5.7, 2.2, 1.5, 0.4],
       [7.2, 2.5, 5.8, 1.6]]), 'uncovered_true': array([], dtype=float64), 'uncovered_false': array([], dtype=float64)}, {'covered_true': array([[5.6, 3.4, 4.5, 1.6],
       [5.8, 2.8, 4.1, 1.5],
       [6.7, 3. , 4.7, 1.5],
       [5.6, 2.7, 4.2, 1. ],
       [6.5, 3. , 4.6, 1.2],
       [5.8, 2.9, 1.2, 1.3],
       [5.6, 3. , 4.2, 1.5],
       [6.7, 2.4, 4.4, 1.1],
       [7. , 3.2, 4.7, 1.8],
       [5.5, 2.6, 4. , 1.4]]), 'covered_false': array([[4.4, 3.1, 1.3, 1.4],
       [5.7, 2.5, 4.1, 1.9],
       [5. , 3.2, 1.2, 1.5],
       [5.8, 2.7, 4. , 1.9],
       [5. , 3.1, 1.6, 1.5],
       [6.8, 2.4, 5.5, 1. ],
       [5.1, 3.1, 1.7, 1.8],
       [4.5, 3. , 1.3, 1.4],
       [6.2, 2.9, 5.4, 1.3],
       [6.3, 3. , 5.6, 2.1]]), 'uncovered_true': array([], dtype=float64), 'uncovered_false': array([], dtype=float64)}, {'covered_true': array([[5.4, 2.4, 4.5, 1. ],
       [5.8, 2.7, 4.1, 1. ],
       [6.2, 3.3, 4.3, 0.5],
       [7. , 2.7, 4.7, 1.3],
       [6.1, 2.9, 4. , 1.3],
       [6.2, 2.5, 4.3, 1.3],
       [5.4, 3. , 4.5, 1.3],
       [5.7, 2.4, 3.5, 1. ],
       [5.7, 2.8, 1.5, 1.3],
       [6.2, 2.6, 4.8, 1.2]]), 'covered_false': array([[5.1, 2.9, 1.9, 1.3],
       [4.4, 2. , 1.4, 1. ],
       [6.9, 3. , 5.7, 1.2],
       [4.6, 2.2, 1.4, 1. ],
       [7.7, 2.3, 6.7, 1.3],
       [7.3, 2.3, 6.3, 1. ],
       [5.2, 2.4, 1.5, 1.1],
       [5.9, 2.5, 5.1, 1.1],
       [5.8, 2.6, 5.1, 1. ],
       [5.8, 2.9, 5.1, 1.3]]), 'uncovered_true': array([], dtype=float64), 'uncovered_false': array([], dtype=float64)}, {'covered_true': array([[6.2, 2.9, 1.1, 1.3],
       [6. , 2.2, 4.9, 1. ],
       [6.6, 2.9, 4.4, 1.3],
       [6.3, 2.3, 4.5, 1.3],
       [6.4, 2.9, 3.3, 1.3],
       [6.7, 3.1, 4.9, 1.3],
       [6. , 3.4, 1.4, 1.1],
       [6.7, 3.1, 4.8, 1.1],
       [6.3, 2.8, 4.2, 1.3],
       [7.6, 3. , 5. , 1.3]]), 'covered_false': array([[5.8, 2.7, 5.7, 1. ],
       [6.1, 2.8, 5.6, 1.3],
       [5.8, 2.6, 6.1, 1.2],
       [6.3, 2.5, 1.5, 0.4],
       [6.4, 3.2, 5.3, 1.3],
       [5.8, 2.7, 1.5, 0.5],
       [6.4, 3.1, 5.1, 1.3],
       [6.6, 3. , 5.7, 1.3],
       [5.8, 2.7, 6. , 0.4],
       [5.8, 2.7, 1.6, 0.4]]), 'uncovered_true': array([], dtype=float64), 'uncovered_false': array([], dtype=float64)}],
           'all_precision': 0,
           'num_preds': 1000000,
           'success': True,
           'names': ['sepal width (cm) <= 3.40', '0.30 < petal width (cm) <= 1.30', 'sepal length (cm) > 5.75'],
           'prediction': array([1]),
           'instance': array([[6.1, 2.8, 4.7, 1.2]]),
           'instances': array([[6.1, 2.8, 4.7, 1.2]])}
  }
)
```

What is happening in the results here?

First, we have the explanation metadata :-

```
Explanation(meta={
  'name': 'AnchorTabular',
  'type': ['blackbox'],
  'explanations': ['local'],
  'params': {…}
})
```

I am going to dive a little deeper into the 'meta' node (it can be called an object too, since it's a dict) under the 'Explanation' root node :-

- The ‘blackbox’ that you see against the key ‘type’, here, implies that the explainer sees / treats the model as a black-box (and doesn’t need to know the internals of the model).

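- The 'local' that you see against the key 'explanations' implies that the explanation applies to a specific instance, and not to the entire model.

- Now coming to the big chunk, the 'params' object. seed is set when constructing AnchorTabular, disc_perc is an argument of explainer.fit(), and the rest are arguments of explainer.explain() :-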

seed : Random seed for reproducibility (None means no fixed seed).

disc_perc : Percentiles of the training data used to discretize numerical features into bins (default: 25th, 50th, 75th percentiles).

threshold : Minimum precision (confidence) required for an anchor to be accepted (default: 0.95).

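delta : Confidence parameter for the statistical bounds on an anchor's precision; roughly, the precision guarantee is meant to hold with probability of at least 1 - delta.

tau : Per Alibi's documentation, the margin between the lower confidence bound and the minimum precision (or the upper bound) that the search uses when deciding whether a candidate anchor meets the threshold.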

batch_size : Number of samples per iteration used when computing anchor coverage and precision.

coverage_samples : Number of samples used to estimate coverage of an anchor rule.

beam_size : Number of candidate anchors considered at each step. Larger values explore more rules but slow down the process.

stop_on_first : If True, stops searching as soon as a valid anchor is found (default: False, meaning it explores more candidates).

max_anchor_size : Maximum number of features allowed in an anchor. None means no limit.

min_samples_start : Minimum number of initial samples generated to begin the search for an anchor.

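n_covered_ex : How many example instances to store for each anchor sampled during the search, both where the prediction agrees with the instance's prediction and where it doesn't. This is why each covered_true and covered_false array in the output has 10 rows.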

binary_cache_size : Size of the cache for binary decisions when testing anchors. Larger cache improves efficiency.

cache_margin : Extra cache space to avoid frequent reallocation.

verbose : If True, prints detailed progress messages.

verbose_every : Frequency of printing updates (e.g., every n iterations).

kwargs : Additional arguments that can be passed to the explainer.
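disc_perc is easier to picture in code. A minimal sketch, assuming numerical features are cut at these percentiles of the training data, as the parameter describes. I suspect thresholds such as 3.40, 0.30, 1.30 and 5.75 in the anchor are such cut points, but compare the printed values yourself rather than take my word for it. cut_points and bins are my own names, not Alibi's.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42)

disc_perc = (25, 50, 75)  # the default shown in the params above

# One set of cut points per feature, computed from the training data only.
cut_points = {name: np.percentile(X_train[:, j], disc_perc)
              for j, name in enumerate(iris.feature_names)}
for name, cuts in cut_points.items():
    print(f"{name}: {np.round(cuts, 2)}")

# Bin index (0..3) of the explained instance for each feature.
# right=True puts a value equal to a cut point in the lower bin, matching rules like "<= 1.30".
instance = X_test[0]
bins = [int(np.digitize(instance[j], cut_points[name], right=True))
        for j, name in enumerate(iris.feature_names)]
print(bins)
```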

Still inside the 'meta' node, we have the 'version' key, with the value '0.9.6'.

The 'version' key records the version of Alibi that produced the explanation, which helps with compatibility and reproducibility.
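To record the installed Alibi version next to your own results, a small standard-library sketch. installed_version is a hypothetical helper of mine; it simply returns None when the package is not installed.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package: str):
    """Return the installed version string of `package`, or None if it isn't installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


print("alibi:", installed_version("alibi"))
```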

Next, I am gonna talk about the data object’s ‘anchor’ key.

For instance, let us look at this sample anchor key value :-

```
'anchor': ['sepal width (cm) <= 3.40', '0.30 < petal width (cm) <= 1.30', 'sepal length (cm) > 5.75']
```

What does this tell?

This is the most important and most intuitive part of the overall explanation.

For the instance that you passed into the explainer (in our case, the first row of the test set, [6.1, 2.8, 4.7, 1.2], which the model predicts as class 1, i.e. versicolor), we got an anchor rule that says that as long as the :-

- sepal width is less than or equal to 3.40

- petal width is greater than 0.30 and less than or equal to 1.30

- sepal length is greater than 5.75

then the model will, with high probability, predict the same class as it did for the instance (class 1 here).

To re-explain the term "instance" for a bit: when I say "the same class for the instance", I mean the class the model predicted for that instance, not necessarily its true label.

An instance is a single row of the tabular dataset: the specific input example for which you want to generate an explanation.

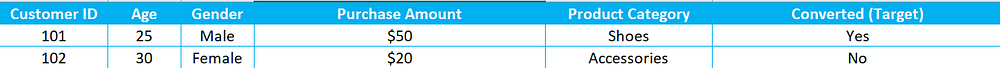

For example, if your dataset contains customer information:

If you want to explain why the model predicted “Yes” for Customer ID 101, then this specific row (Age = 25, Gender = Male, Purchase Amount = $50, etc.) is the instance being explained.

Even if the other features (those not in the anchor conditions) change, as long as the anchor conditions (on sepal width, petal width, and sepal length in this case) hold, the model should still predict the same class with high probability; that probability is what the anchor's precision estimates.

Take petal length, the one iris feature that is not part of this anchor: as long as the anchor conditions are met, changing petal length should usually leave the prediction unchanged. "Usually" matters here. The guarantee is statistical and is estimated on samples drawn from the training data, not proven for every value you could type in.
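A quick way to poke at this yourself: keep the instance's anchored features fixed and swap in the petal length of every training row. This is a blunt simplification of the idea, not Alibi's sampler. Rows in the raw output such as [5.8, 2.9, 1.2, 1.3], which pair a setosa-like petal length with a versicolor-like petal width, suggest that Alibi's samples also mix feature values from different training rows. If some large petal lengths flip the prediction, that is consistent with precision being an estimate rather than a guarantee.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42)
model = RandomForestClassifier(n_estimators=100, random_state=42).fit(X_train, y_train)

instance = X_test[0]                                # the explained row
target = model.predict(instance.reshape(1, -1))[0]  # its predicted class

# One copy of the instance per training row; only petal length (column 2),
# the feature not in the anchor, is replaced by that training row's value.
perturbed = np.tile(instance, (len(X_train), 1))
perturbed[:, 2] = X_train[:, 2]

same = model.predict(perturbed) == target
print(f"{same.sum()} of {len(perturbed)} perturbed rows keep class {target}")
print("petal lengths that flip the prediction:", np.unique(perturbed[~same, 2]))
```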

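Next (and possibly the last of the stars :p):

Precision = 1.0 is Alibi's estimate of the fraction of samples satisfying the anchor conditions that receive the same prediction as the explained instance; 1.0 means all the samples it counted did. It comes from sampling, so read it as an estimate, not a proof for every possible input.

And Coverage = 0.0678 means that the anchor applies to about 6.78% of the rows sampled from the training data to estimate coverage (coverage_samples = 10000 in the params above). In other words, the rule describes a small, specific slice of the data.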
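Both numbers are ratios, so they can be estimated by hand. A minimal sketch under stated assumptions: coverage is the share of 10,000 rows drawn from the training data that satisfy the anchor. Precision uses a simplified sampler of my own, not Alibi's: random training rows whose anchored columns are copied from training rows that satisfy the anchor. Expect numbers in the same spirit as the printed 1.0 and 0.0678, not identical ones. anchor_mask is a hypothetical helper. If coverage really is estimated over the 10,000 coverage samples, 0.0678 corresponds to 678 of them.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42)
model = RandomForestClassifier(n_estimators=100, random_state=42).fit(X_train, y_train)
target = model.predict(X_test[0].reshape(1, -1))[0]


def anchor_mask(X):
    """True for rows that satisfy the anchor from the explanation above."""
    return ((X[:, 1] <= 3.40) & (X[:, 3] > 0.30) & (X[:, 3] <= 1.30)
            & (X[:, 0] > 5.75))


rng = np.random.default_rng(0)

# Coverage: share of 10,000 rows drawn from the training data that the anchor applies to.
draws = X_train[rng.integers(0, len(X_train), size=10_000)]
coverage = anchor_mask(draws).mean()

# Precision (simplified sampler, an assumption): random training rows whose anchored
# columns (0, 1, 3) are copied from training rows that satisfy the anchor.
anchored = [0, 1, 3]
donors = X_train[anchor_mask(X_train)]
samples = X_train[rng.integers(0, len(X_train), size=1_000)]
samples[:, anchored] = donors[rng.integers(0, len(donors), size=1_000)][:, anchored]
precision = (model.predict(samples) == target).mean()

print(f"coverage  ~ {coverage:.4f}")
print(f"precision ~ {precision:.2f}")
print("0.0678 of 10,000 samples:", round(0.0678 * 10_000))
```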

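The covered_true and covered_false arrays show example rows sampled during the search (up to n_covered_ex = 10 of each, which is why each array has 10 rows) :-

- covered_true : samples where the anchor held and the model predicted the same class as for the explained instance. This compares against the model's own prediction, not the true label.

- covered_false : samples where the anchor held but the model predicted a different class.

- uncovered_true / uncovered_false : uncovered instances, where the rule was not applicable; both are empty arrays in this output.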
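Here is how such a split could be produced. This is a minimal sketch that reuses a simplified sampler (my assumption, not Alibi's): random training rows whose anchored columns are copied from training rows that satisfy the anchor, so every sample is "covered". The rows are then split by whether the model's prediction matches the instance's, keeping at most n_covered_ex of each.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42)
model = RandomForestClassifier(n_estimators=100, random_state=42).fit(X_train, y_train)
target = model.predict(X_test[0].reshape(1, -1))[0]

rng = np.random.default_rng(1)
n_covered_ex = 10     # same as in the params above
anchored = [0, 1, 3]  # sepal length, sepal width, petal width

# Training rows satisfying the anchor act as donors for the anchored columns.
in_anchor = ((X_train[:, 1] <= 3.40) & (X_train[:, 3] > 0.30)
             & (X_train[:, 3] <= 1.30) & (X_train[:, 0] > 5.75))
donors = X_train[in_anchor]
samples = X_train[rng.integers(0, len(X_train), size=500)]
samples[:, anchored] = donors[rng.integers(0, len(donors), size=500)][:, anchored]

preds = model.predict(samples)
covered_true = samples[preds == target][:n_covered_ex]   # same class as the instance
covered_false = samples[preds != target][:n_covered_ex]  # a different class

print("covered_true:\n", covered_true)
print("covered_false:\n", covered_false)
```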

That's as far as I have parsed. More is a WIP.

If you can make more sense of the raw explanation, please shoot me a comment.

And if you liked this write-up, please drop a like and leave a comment.

Cheers :)